
Playing with AI: Approximating Expertise at Coding

I find offering an opinion on artificial intelligence a daunting prospect, since I must disclaim any expertise in the field. My work with AI so far has been restricted to trying to detect its presence in written assessment, and the use of publicly available tools to do work that I find tedious or difficult. As a result, the following is a product more of imagination than experience and reflects my interest in applying AI productively to research in general and analysis in particular. The examples I provide reflect my own interests and experience with a particular application to computer-assisted research, but I believe that principles identified can apply more generally.

Computer-assisted musicology is much more accessible now than in the past. The free availability of tools such as Michael Cuthbert’s Music21 toolkit, a library for the Python programming language, provides a ready means of conducting musicological research from the computer keyboard. These tools do not solve everything, since scholars will still need to find or prepare digitized versions of the desired repertoire. The biggest obstacle to wider adoption of digital techniques, however, is a reluctance to write code. I have observed this at work when teaching an introductory unit in digital musicology at the University of New England: students find the prospect of writing code daunting and will at times leave the unit rather than engage, despite coding playing almost no part in the assessment.

Similar to my students, someone interested in digital musicology but with limited coding experience is nonetheless able to imagine queries, to think of questions one might ask of a computer analysis program. AI makes it possible to start the process of designing these sorts of systems without the need for advanced programming expertise. Further, taking AI-generated code as a starting point is a potentially effective way to develop one’s own code reading and writing skills.

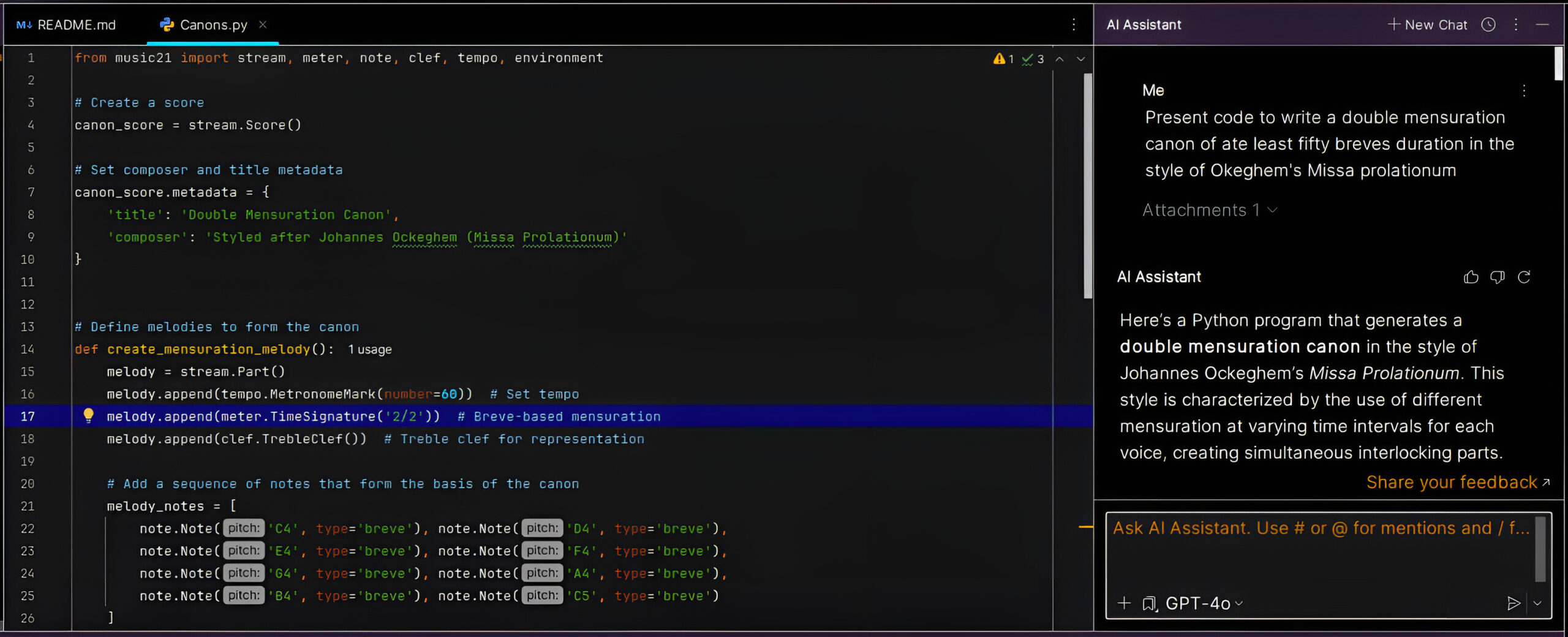

To illustrate this point, I gave the following deliberately imprecise prompt to the AI assistant in my coding app (figure 1): “Present code to write a double mensuration canon of at least fifty breves duration in the style of Ockeghem’s Missa prolationum.” In response, the AI correctly identified that it could use the Music21 toolkit and produced code that ran with only minor corrections, successfully displaying the “composition” in Sibelius.

As figure 2 shows, AI has no real understanding of what a double mensuration canon is: it provides two voices rather than four and the voices remain synchronized throughout. Only the approximate idea of a grouping of three against a grouping of two remains. This result is satisfying in a way since the problem lies not in obtaining working code but in finding the instructions that will produce code that solves the right problem. This in turn suggests a pleasingly symbiotic relationship: the AI needs me to explain mensuration canon clearly and thoroughly as much as I need the AI to generate the code.
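A minimal sketch may make the gap concrete. In a mensuration canon every voice performs the same notated melody, but each reads it under a different mensuration, so the voices move at different rates and drift apart. The sketch below is plain Python rather than Music21, and it is not the code the AI produced. The melody is invented for illustration, and treating a change of mensuration as a uniform 3:2 scaling of every note value is a simplifying assumption; real mensural notation is subtler than this.

```python
from fractions import Fraction

# Hypothetical melody as (pitch name, duration in breves); invented for illustration.
MELODY = [
    ("D4", Fraction(1)),
    ("E4", Fraction(1, 2)),
    ("F4", Fraction(1, 2)),
    ("G4", Fraction(1)),
    ("A4", Fraction(2)),
]


def realize(melody, scale):
    """Return (onset, pitch, duration) events with each duration multiplied by scale."""
    events = []
    onset = Fraction(0)
    for pitch, duration in melody:
        scaled = duration * scale
        events.append((onset, pitch, scaled))
        onset += scaled
    return events


# Assumption: a change of mensuration is modeled as a uniform 3:2 scaling.
leader = realize(MELODY, Fraction(1))
follower = realize(MELODY, Fraction(3, 2))

# Both voices start together, then each later note arrives at a different time.
for (on_a, pitch, _), (on_b, _, _) in zip(leader, follower):
    print(f"{pitch}: leader enters at {on_a}, follower at {on_b}")
```

The two voices begin together and then desynchronize, which is precisely the behavior missing from the AI's output. A double canon would add a second melody realized under two further ratios, and making the four resulting voices form acceptable counterpoint is the hard part that no such sketch addresses.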

Two major points emerge from this experiment. First, AI will generate code that more-or-less works. This is a special boon to nervous coders, since it allows their first interactions with coding to be editorial rather than generative; just as most people find it easier to edit than to write, newcomers to digital musicology can begin by responding to a short program written in response to their own brief and explore why it does or doesn’t work or, most likely, why it does something different from what they thought they had requested. Newcomers can thus respond to something generated by the AI rather than being expected to fill a blank page with code, and the more easily a researcher can create code that does something, the more likely that researcher is to add digital methods to their toolkit.

As an aside, preparing a sound prompt to an AI is an art form of its own. It depends on clarity, precision, and the ability to break complex concepts down into simple components—features it shares, incidentally, with good programming practice. Writing a good prompt therefore both develops and tests the sorts of skills desirable in a digital musicologist based on their knowledge of their own topic. In this sense, AI can help train the skills needed to get the most out of AI.

The second major point to emerge from the experiment is the way AI enables initial exploration of complex problems. I am not a programmer. I am a musicologist, and wish to expend my limited mental energy on musicological questions, not program syntax. Example experiments like the one above allow me to test the waters, to explore the feasibility of a given approach without having to design and build a complete program to do so. Even with the lack of scoping in the example, I emerge from the experiment better informed on how to proceed. If I were trying to develop a program to write a double mensuration canon, I am now more aware of where precise instruction is required, or perhaps I understand what parts of the program I might have to write myself and which could be left to the AI. Iterative refinement might not lead to a canon-writing program, but it would, I expect, tell me whether such a program were possible and which parameters were required.

AI’s use as an exploratory tool and a means of self-training becomes important when thinking on larger scales. In a hypothetical multi-disciplinary research project, the musicologist is unlikely to be the person writing the code. Rather, the musicologist’s likely role will be to determine the nature of the research question and identify the theory that allows an answer to that question. The ability to do this depends not on familiarity with the syntax of any specific programming language but, rather, on an understanding of the ways that programs and data structures generally tend to work. AI makes it easier to develop this understanding by removing some real or imagined barriers between musicological curiosity and digital answers. The key relationship between AI and musicology in this regard is that AI limits the initial need for detailed technical knowledge where the technique is simply a means to finding results rather than the source of those results. I would expect that this same utility would extend beyond the field of digital musicology, which is my focus here.

I would also like to explore the potential applications of AI for solving specific problems in music analysis. While digital musicology is a thread that joins the following with the preceding, here AI’s function is distinct from its use as a reference, enabler, and drafter of first resort.

My specific field is the analysis of late-fifteenth-century polyphony, particularly the cantus firmus mass. These masses are an almost perfect testbed for ideas of computer-assisted analysis as they typically comprise only four voice parts, contemporary counterpoint teaching might almost be designed for use as a data structure, and modern tools such as Music21 make it straightforward to measure the former against the latter. While I will discuss analytical problems occurring in this repertoire in detail and concentrate on my own situation, I am confident that the concepts discussed can be applied to a much wider range of analytical problems, particularly in cases where it is possible to describe music in terms of simple theoretical structures.

The problem that confronts me as an analyst of this repertoire is not that I don’t have a theory about the compositional structure of this music. I do have a theory, but that theory is only loosely defined: in broad terms it predicts that there will be certain rules that apply to different contrapuntal relationships but is presently unable to state or even to ascertain these rules. I expect that this is a data analysis problem, which in theory is well suited to computer-assisted treatment.

A necessary first step is solving some subsidiary problems. These are issues in analysis, but it is not clear how far analysis itself can resolve them. At the front of the queue is the matter of identifying cadences by machine, since the theory understands four-voice composition as a chain of cadential pairings to which accompaniment of the remaining voices is then added. My research to this point leads me to expect some form of cadence at, for example, each entry and departure of the tenor voice. In the accompanying excerpt from Ockeghem’s Missa L’homme armé, the first and last cadences are clear, while cadences at other points are ambiguous, at times overlapping and potentially contradictory.

One consequence of cadence as the basic articulating structure of polyphony, however, is that composers become interested in hiding it; while many cadences are easily recognized, others are less obvious, concealed behind avoided progressions, rests, and other obscuring devices. This desire to conceal makes it practically impossible to identify cadences through a comprehensive definition of cadential structure, since as soon as a progression becomes cadential, it too may need to be concealed. Such definitions may also be defeated by any unorthodoxy in the composition. Any attempt at an exhaustive description of cadential form thus seems doomed to failure.
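A small sketch shows how quickly a fixed definition runs out. It encodes only one textbook pattern, a major sixth between two voices expanding to an octave by stepwise contrary motion, and it assumes the music has already been reduced to aligned note-against-note pitches given as MIDI note numbers. The function name and both short passages are invented for illustration; this is not a proposed detector.

```python
def find_sixth_to_octave(upper, lower):
    """Return each index i where a major sixth at i expands to an octave
    at i + 1 with both voices moving by step in contrary motion."""
    hits = []
    for i in range(len(upper) - 1):
        before = upper[i] - lower[i]          # vertical interval in semitones
        after = upper[i + 1] - lower[i + 1]
        upper_step = upper[i + 1] - upper[i]
        lower_step = lower[i + 1] - lower[i]
        stepwise = abs(upper_step) in (1, 2) and abs(lower_step) in (1, 2)
        contrary = upper_step * lower_step < 0
        if before == 9 and after == 12 and stepwise and contrary:
            hits.append(i)
    return hits


# Invented passages as MIDI note numbers (60 = middle C).
UPPER = [69, 71, 73, 74]          # A4 B4 C#5 D5
CLEAR_LOWER = [65, 67, 64, 62]    # F4 G4 E4 D4: the sixth E-C# opens to the octave D-D
EVADED_LOWER = [65, 67, 64, 58]   # same approach, but the lower voice leaps away to B-flat

print(find_sixth_to_octave(UPPER, CLEAR_LOWER))
print(find_sixth_to_octave(UPPER, EVADED_LOWER))
```

The second passage prepares the same arrival, but the lower voice sidesteps it and the rule reports nothing. Each further rule added to catch such evasions invites a new exception, which is the regress described above.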

As a human analyst, I find this situation vexing, as I believe I will tend to identify cadential structure in ways that better satisfy the requirements of my pet theory. A potential solution to this problem presented itself, however, when I read David Huron’s comment that computer-assisted auditory scene analysis—that is, identifying and recognizing discrete sources of sound within a given audio environment—depended largely on machine learning.1 Could a similar approach solve the problem of automatic cadential identification?

Machine learning, particularly a technique known as supervised learning, operates by training an algorithm on a corpus of prepared examples where the features to be identified are marked in advance. Over the course of the training, the aim would be to have the algorithm extrapolate from this marked data to recognize unmarked examples of the same phenomenon. The fact that cadential structure in my sample repertoire exists on a continuum of obviousness, from unmistakable to highly obscure and ambiguous, points to the promise of this approach: training could begin with obvious cases and move gradually to the more complex. Using supervised learning comes with the need to monitor the type of supervision carefully in order to avoid simply implementing one’s personal prejudices in code, but the approach seems promising.
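The shape of supervised learning can be sketched in a few lines, on the understanding that everything specific here is an assumption made for illustration. The three yes/no features, the handful of labeled examples, and the choice of a nearest-centroid classifier are all invented; a real project would depend on a hand-annotated corpus and would more likely use an established library such as scikit-learn.

```python
from statistics import mean

# Hypothetical features for one moment in the music, each 0 or 1:
# (arrives on a perfect consonance, stepwise contrary motion, half-step leading tone)
TRAINING = [
    ((1, 1, 1), "cadence"),
    ((1, 1, 0), "cadence"),
    ((0, 0, 0), "not cadence"),
    ((1, 0, 0), "not cadence"),
    ((0, 1, 0), "not cadence"),
]


def train_centroids(examples):
    """Average the feature vectors of each label into one centroid per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid lies closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))
    return min(centroids, key=lambda label: squared_distance(centroids[label]))


centroids = train_centroids(TRAINING)
print(predict(centroids, (1, 1, 1)))
print(predict(centroids, (0, 0, 0)))
```

The labels in the training list are where the analyst's judgment, and therefore the analyst's prejudice, enters the system: the algorithm can only generalize from what it has been told is a cadence.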

Cadential identification is just one example of the type of analytical problem that might be amenable to AI-assisted resolution. Another example is the ability to identify decorative consonance in contrapuntal textures. Both these cases are matters of preparing the data for analysis, however, with the goal of identifying an underlying note-against-note contrapuntal network in four-voice texture. The final task of my notional analytical program would be to explore a large body of this data, first to infer the rules of compositional behavior, a process analogous to Huron’s manual identification of voice-leading rules from Baroque chorales. Here, machine learning offers a means of identifying patterns in data that correspond to Huron’s preference rules, leading to a sophisticated model of compositional style against which further compositions can be measured.
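At its simplest, exploring such data could begin with counting. The sketch below tallies how often one vertical interval is followed by another between two voices of a note-against-note reduction. The function name, the MIDI-number input, and the short passage are assumptions for illustration, and a frequency table of this kind is only raw material for inferring rules, not a model of style.

```python
from collections import Counter


def interval_successions(upper, lower):
    """Count pairs of successive vertical intervals (in semitones)
    between two aligned note-against-note voices."""
    intervals = [u - l for u, l in zip(upper, lower)]
    return Counter(zip(intervals, intervals[1:]))


# Invented passage as MIDI note numbers (60 = middle C).
UPPER_VOICE = [69, 71, 73, 74]
LOWER_VOICE = [65, 67, 64, 62]

for (first, second), count in interval_successions(UPPER_VOICE, LOWER_VOICE).items():
    print(f"{first} -> {second} semitones: {count}")
```

Run over a large corpus, tallies like these would show which successions are common, rare, or absent, and those are the regularities a learned model would need to capture.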

The imagined result of solving these problems is the creation of an analytical piece of software that is able to read a piece of digitized polyphony, examine its entire contrapuntal complex and produce an analysis of its compositional structure, including the ways in which it differs from similar works. AI would certainly have a role in this process, since its ability to traverse large bodies of data would allow it to reach these conclusions, while every analytical exercise would be one more piece of training data. It would be amusing, for example, to see how accurately this combination of AI and analysis could identify the composer of a sample piece. In the long run, interesting possibilities lie in that direction.

As I mentioned above, my experience in working with AI is limited, and while I am generally skeptical of AI’s ability to produce anything interesting or creative, I do believe in its potential to automate the drudgery out of much research work. Even if this may not represent a great saving in time or effort, it exchanges the drudgery for more interesting work, swapping writing code for thinking of interesting questions. For someone like myself, that is an exchange worth making. Now, though, you will have to excuse me; I’m off to see whether my AI assistant can write a machine-learning algorithm.