| Tasty byte-size provocations to refuel your thinking! | Brought to you by: |

Musicology in a Time of Technological Transformation

Musicology and the Mechanical Eye

New technologies of writing have always complicated the relationship between authors and readers. This is especially true of the performing art of music. From the beginnings of Western musical notation to the advent of the printing press, sound recording, and now the digital domain, new technologies of transcription brought about means for controlling the effects and purposes of music, even inaugurating a new sense of it as intellectual property. Each was a “new medium” of its day, and each brought with it new ways for composers, performers, and listeners to interact around musical ideas. Now it is transforming scholarship, too, particularly with the arrival of Large Language Models and the uncanny knowledge they seem to reveal.

What might such change mean for musicology, and the musicologists who make it? We once viewed print as the durable means through which we put our best ideas before colleagues and the wider musical public. Now digital texts erode critical authority while simultaneously heightening the need for us to take responsibility for the claims we make. These days almost anyone can create a digital archive to store and distribute the “flat” graphical symbols of standard musical notation (a PDF file will do). Indeed, resources like IMSLP or the CPDL are already heavy with hundreds of thousands of both historical documents and home-grown editions. The authoritative Urtext and error-laden mess are offered up as interchangeable versions of the truth.

The same medium nevertheless also obliges us to take responsibility for our decisions in newly transparent ways. Thanks to structured encodings known as XML (eXtensible Markup Language) one scholar can make a transcription of a text, but another can add variants readings, make annotations, or reconstruct missing parts without ever effacing the original work. Unlike the real thing, these digital palimpsests are infinitely reusable but also indefinitely recoverable. They are held up as the acid-free paper of the computer age.

There already is a shared XML standard for historical and literary documents: it is called Text Encoding Initiative (TEI), and it has been used for almost any kind of project one can imagine: transcriptions of thirty-two copies of the quarto edition of Hamlet, or dynamic editions of thousands of seventeenth-century English broadside ballads. For the last decade there has been a similar standard for music, the Music Encoding Initiative (MEI). It is not a tool for engraving music; rather, it is a structured standard that accommodates the full range of bibliographical and critical details that are essential to any scholarly approach to musical texts—it keeps the data (the notes) together with the metadata (where they came from, who selected them), all in a way that remains open to reuse, inquiry, and study.

Standards like MEI can record a large vocabulary of nuanced elements traditionally associated with the scholarly critical edition: variants, emendations, reconstructions, and even information about who is responsible for them. XML nevertheless sits at the fringes of Data Science as it is taught today, despite its flexibility for digitally encoding textual and musical artifacts to support both online presentation in digital editions and musicological inquiry through computational analysis. Many students, even those with dual computational and musical interests, remain unaware of its significance let alone how to work with it effectively. No doubt our collective capacity with these kinds of tools will increase in the years to come, as we look increasingly at the need for sustainable, reusable, and interoperable texts in a resource-constrained context. But to this we must now also add a potent new possibility to the musicological mix: Large Language Models and the artificial intelligence they make possible.

Large Language Models and the Futures of Scholarly Inquiry

The rapid advancement of Large Language Models (LLMs) in the last two years builds on decades of research into machine learning and monumental leaps in computation. Many of us are now familiar with (and worried about) the generative possibilities of these technologies, as they are used to write emails, compose or summarize prose, and even create images or videos, sometimes displaying an alarming tendency to hallucinate uncanny falsehoods. Tools used the wrong way can be dangerous or destructive, but now more than ever we must cast a critical, human eye over their mechanical limitations. Avoiding LLMs is already close to impossible. The challenge will be learning how to use them effectively to make the most of the unprecedented opportunities they offer for new modes of research. Here we explore two areas that are focal points in our own work as researchers and teachers: data about music (the structured representation of claims concerning works, performances, and the people who make them), and music as data (the structured representation of symbolic music scores). In each we plan to use LLMs to investigate textual or musical sources that we supply, rather than simply letting the model look to the endlessly unreliable internet in formulating its responses.

Case 1: Data About Music

The capabilities of LLMs can be enhanced with Retrieval Augmented Generation (RAG), a pattern whereby we search a large database or other corpus of documents for information relevant to any query we want to answer. These retrieved documents are then passed to the LLM along with our query. This approach helps to address the challenge of hallucination, because the LLM is asked to give responses based on the documents retrieved rather than its own internal representations. We might, for instance, query a corpus of eye-witness testimonies (e.g., from a historical newspaper) about musical performances for clues about the events themselves or the values of those who listened. Or we might query theoretical writings about music for insights about how the writers of those treatises understood musical language.

Part of the power of these RAG-based question-answering systems lies in the common use of new ways of storing and searching text in vector databases. The documents that we want to search are first broken into chunks (e.g., of 500 characters), and then converted to numeric representations, vectors, typically long sequences of numbers, through an “embedding model,” that are then stored in the database. These vectors capture semantic meaning in a way that is much more nuanced than storing lists of words, representing words in a mathematical space that puts (to take a hypothetical example) the concepts of consonance and dissonance at the same distance and direction apart as the words order and disorder. Once modeled in this way, our system can search out not just keywords but the concepts that animate them. Significantly, such databases seem surprisingly capable of retrieving relevant results for a query even when there are many imperfections in the text being searched, as is often the case when working with historical documents that have been digitized using Optical Character Recognition (OCR) software, which often mistakes letters here and there. Thus, combining a LLM with a vector database, these RAG systems can effectively provide responses based on documents relevant to the intent of a query. Their application to musical inquiry has only just begun.

In addition to qualitative reports about our corpus (prose summaries, hints about key values, or change over time), we could also produce structured responses. That is, our tools could attempt to identify and categorize the people, places, ideas, and sounds described in these texts, and in turn present them in a format already suited for inclusion in a structured database or in tabular format for subsequent data analysis. The usually tedious creation of TEI documents is one area where LLMs have enormous potential to help identify and mark up textual elements of interest. We might at first recoil from the idea that machines rather than humans would be doing the work of reading on our behalf, but if deployed carefully these technologies promise to unveil new insights without the need to fund thousands of hours of work to hand annotate every document.

Conversely, LLMs can be used to facilitate access to existing structured data of a sort found in many databases. Normally, researchers interact with such databases through graphical user interfaces (such as forms or search boxes) or programmatically through Application Programming Interfaces (APIs). Some digital databases, known as Linked Open Data repositories, are designed to be accessible through specific formats that computers can understand. These databases have special API access points, or endpoints, which allow users to search for data using a specialized query language known as SPARQL. However, the rules for forming such search queries in SPARQL (the “syntax”) are complex and remain impenetrable for those without special training. Fortunately, LLMs offer the opportunity for creating natural language interfaces, which let users ask questions in plain, everyday language. The system then converts these questions into structured SPARQL queries, making it easier to retrieve information from the database.

Case 2: Music as Data

Our experience with creating MEI encodings of Renaissance music offers another set of possibilities for the use of LLMs. Renaissance singers were used to figuring out many things for themselves. Sometimes, musicians had to supply unwritten sharps and flats in order to make the music sound as it should. Therefore, when modern editors approach this kind of situation, they take care to indicate the accidentals above the staff, making explicit both the intended musical result and the fact that the modern editor was the one responsible for it. MEI encodings of such passages record these interventions in logical terms that make it unequivocally clear that the <accid> tag (as it is formed in this standard) has been <supplied>, together with the reason for it. Armed with a corpus of such editions we could pass our files to a LLM along with instructions about where to look for these interventions and for the statements of editorial responsibility found in the headers of the MEI file. In turn, we could query our own encodings about editorial practice or the need for musica ficta in different composers, genres, or decades.

Could we also leverage the capacity of LLMs to tell us something about the style and structure of encoded scores? On one hand the challenges are significant: XML encodings of the sort described are incredibly verbose—it takes hundreds of lines of code to represent what can be seen in a few pages of score. Moreover, whereas a human reader can easily see the relationship among successive and simultaneous notes on the page, XML encodings are arranged logically (not graphically) with the result that the last note of one bar in a given voice might be hundreds of lines of code away from that voice’s first note in the next bar. Finding simultaneous pitches is no less complex, requiring code to interpret the time codes for all events in the file. The solution, as we found in a recent experiment, is to lend our LLM something of a helping hand, in this case by first turning our XML scores into “streams” of notes and then into lists of melodic, harmonic, and contrapuntal patterns. We do this with the help of the Python programming language, a widely used way of working with all kinds of data (texts, numbers, etc.). Python commands are assembled into functions (a routine to find and count all the pitches in an XML file, for example). The functions are in turn bundled into libraries that be installed on any computer (or run in the cloud). Key for our work has been the Music21 library (it does all the heavy lifting of finding notes, durations, intervals), which in turn serves as a foundation for CRIM Intervals, a library that we developed for “Citations: The Renaissance Imitation Mass (CRIM),” a project devoted to the idea of borrowing, citation, and similarity in sixteenth-century counterpoint. But we wondered: what would a LLM make of the information we can extract from XML in this way? And so, as an experiment, we took the diatonic melodic intervals from just the right-hand part of one of J. S. Bach’s famous Two-Part Inventions, no. 10 in G Major, BWV 781.

© 2023 Knute Snortum. This work is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License.

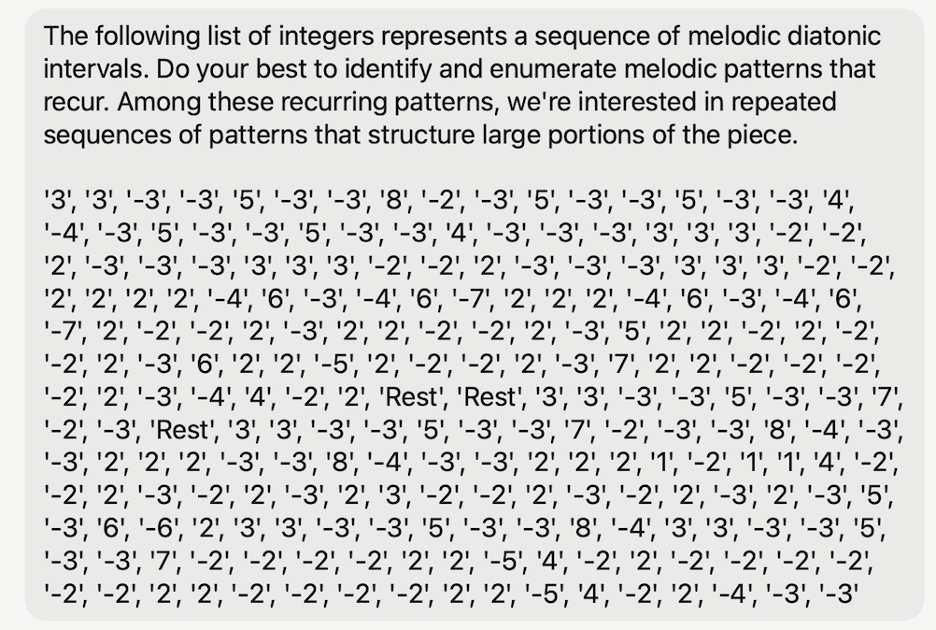

We used our CRIM Intervals tools to turn this score in to a long list of integers (and without information about durations). We then asked ChatGPT a series of questions about our list: Could it identify key repeating patterns and begin to analyze the structure of the piece? Could it turn the patterns back into some kind of XML so we could review them in standard symbolic notation? Could it guess the genre, style, or even composer?

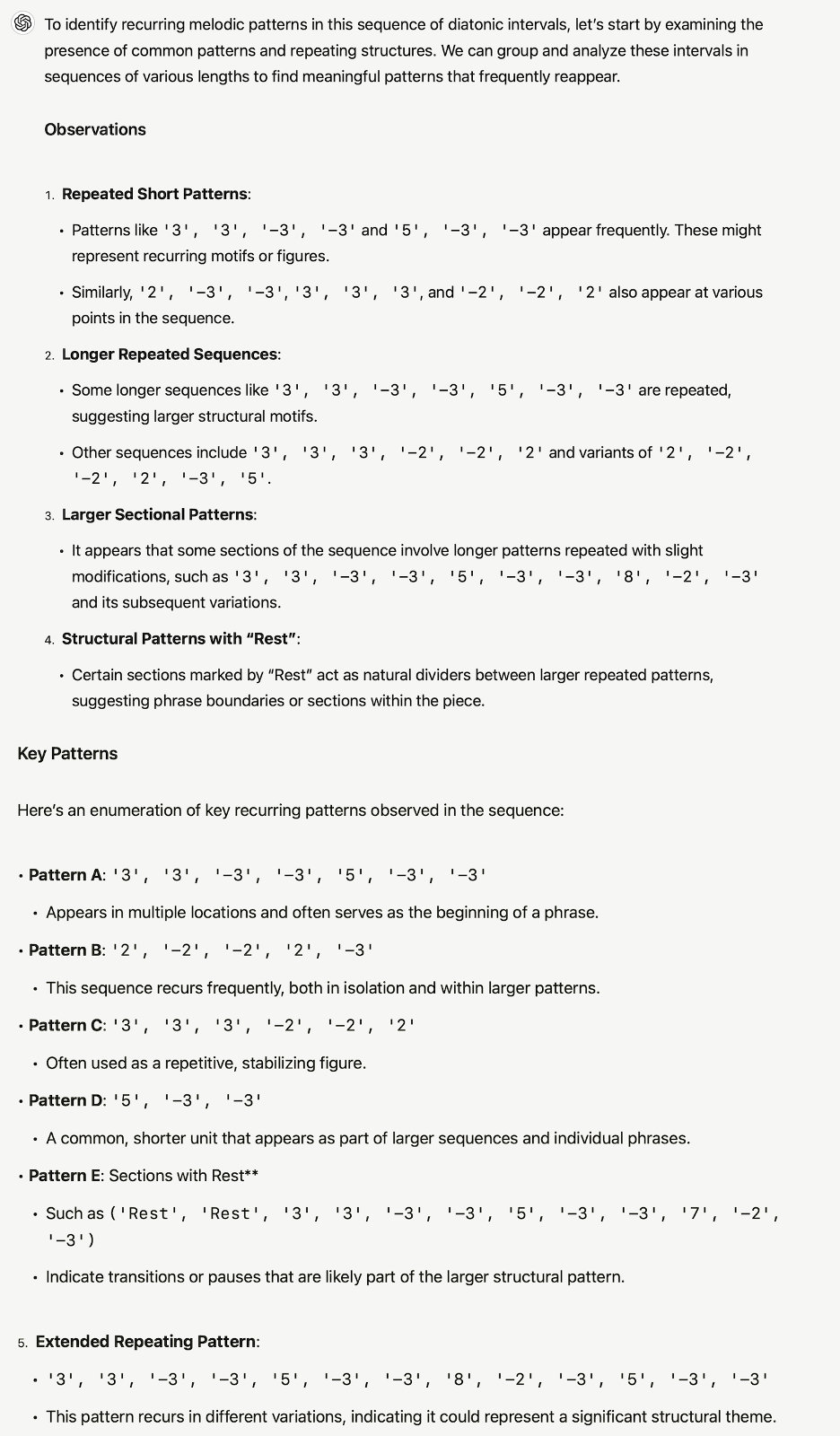

Here are some screenshots from our conversation with ChatGPT:

And here is the response! Remember that we did not in fact tell ChatGPT anything about which of these patterns interested us. We only asked that it find them on its own, based on everything it has already learned about musical intervals and analysis.

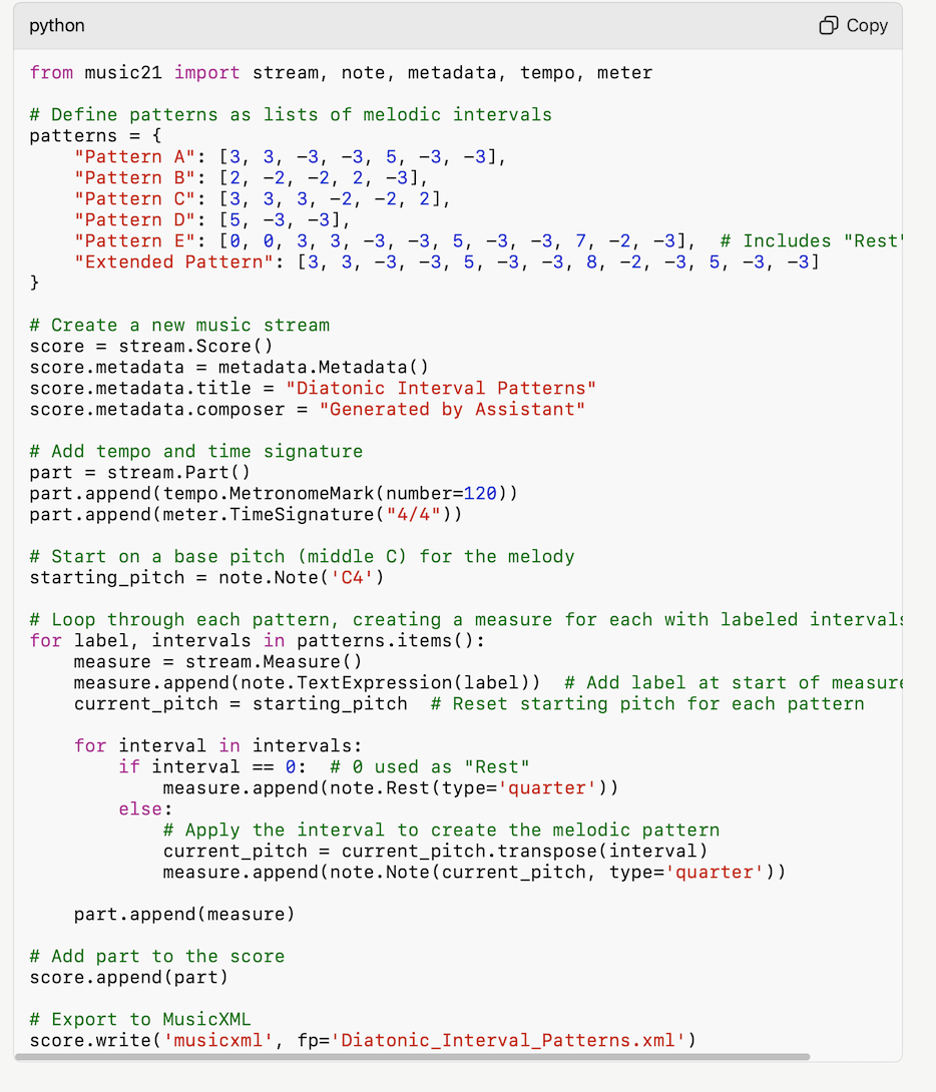

These patterns seemed interesting, but it would be good to have them presented in a more human-readable form for review. So, we asked ChatGPT to create a MusicXML encoding from the string of integers, assuming a fixed rhythmic value for each, and with the patterns identified so that we could open these in a score editor for review. It also did so, providing the Python code needed to create the file, which we then opened in mei-friend and Sibelius. In effect, the machine both found the examples and then wrote the code that could turn them back into notation, all on the basis of what it had already read and learned about the workings of musical encoding.

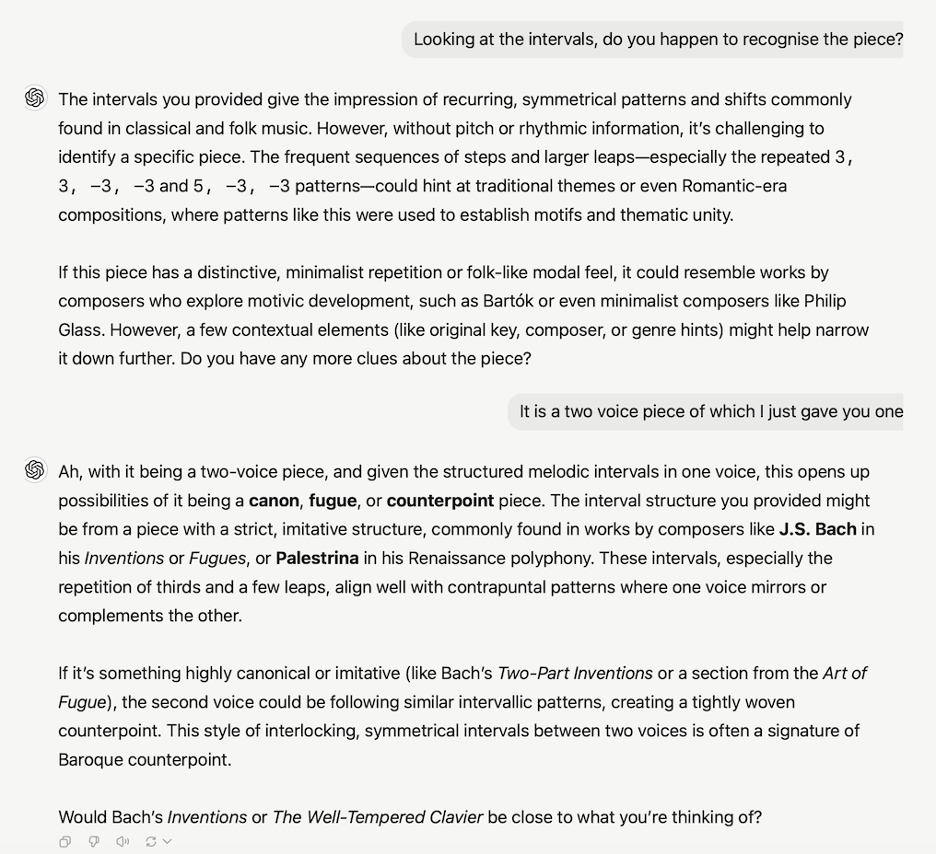

We then wondered whether ChatGPT could recognize the piece. At first it hedged, allowing that without exact pitch or rhythmic information, it could not be very accurate. It did venture, however, that the piece, with its steady repetition of a relatively limited number of patterns, might be either folk music, a minimalist composition (like one by Philip Glass), or by another composer who favors the close development of local patterns (like Béla Bartók).

We then explained what we had previously failed to say: this is “one part from a two-voice piece.” “Ah,” it replied (one of the typically uncanny responses that such LLMs produce). But then it did something even more uncanny. It surmised that it was in a contrapuntal or fugal style, perhaps by Palestrina, or more likely some example of interlocking, contrapuntal music from the Baroque. “A two-part invention by Bach?,” it surmised? We were astounded by the uncanniness of the response. Of course, the LLM did not “think” (at least in the way a musicologist expert in the style might), but it did find that “Bach” and “two-part invention” were somehow in the same vectorized representation of language as systematic repetition and counterpoint. Beyond this we cannot say.

Looking Ahead

What are these sorts of projects likely to mean for our lives as scholars? In recent decades the old solitary humanist burrowing away in their library carrel in search of unique insights has already become if not an endangered species, then at least a more wary one, as LLMs make possible kinds of readings that could never have been undertaken by a single scholar, even over an entire career. Digital skeptics worry that the machine eye (and ear) will soon replace the human one. But we need to remember that algorithms seem uncanny precisely because they play back our all-too-human ways of thought and expression. Thus, the key will be to inform ourselves about how these machines work (and how they fail). The patterns they uncover in this way are not the answers, they instead prompt us to formulate new questions. They demand human hypothesis and interpretation. Our roles are changing once again, and it will be up to us whether we play our instruments, or they play us.